On Friday 28th March I uploaded the Beta Code for SQBite to Spyder Forensics Github. This version was a major update from the Alpha code that I released earlier this year, with new features and a completely different output format which is a lot easier to work.

Note: In the initial release there were a few bugs that were identified which I fixed in beta 2, a few more in Beta 3 and another in Beta 4.

Just a reminder: The purpose of this tool is not to reinvent the wheel for forensics on SQLite databases, but to be used for validation, and as an educational tool for my Advanced Applied Database Forensics class that I teach at Spyder Forensics (My employer). There is a lot more information about the records that is output than you would typically see in your main forensic tools. For example, the first 7 columns for a record in the output is not the record content but information about the record and where it is physically located.

Just a reminder: The purpose of this tool is not to reinvent the wheel for forensics on SQLite databases, but to be used for validation, and as an educational tool for my Advanced Applied Database Forensics class that I teach at Spyder Forensics (My employer). There is a lot more information about the records that is output than you would typically see in your main forensic tools. For example, the first 7 columns for a record in the output is not the record content but information about the record and where it is physically located.The latest version of the tool can be downloaded from: SQBite Code

Beta Version Functionality

My focus for this version was performance enhancements and parsing of freelist pages, freeblocks, unallocated space and some analysis functionality.

The tool is super simple to use and in its current state doesn't require any additional python modules.

python SQBite.py

-i [path to maindb]

-w [path to wal (optional)

-o [output path]

-c Record Classification

-s [keyword to Search]

Example Usage: python SQBite.py -i Evidence\history.db -w Evidence\history.db-wal -o DuckDuckGo_History -c -s spyder

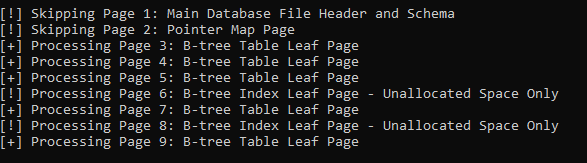

Main Database File Parsing

I decided to use a different approach to handle parsing of the main database file. In the Alpha code I traversed Table B-trees and extracted the records table by table, but now the code parses the main database file page by page which is significantly faster and enables the parsing of other types of pages.

1. Parses the sqlite_master table to identify table and determine the root pages for tables in the database, then walks each b-tree to determine which pages belong to which table.

2. Walks through the main database file page by page and parses the data

In this part the page type is identified and then depending on what type of page different areas of the page are parsed:

- B-tree Table Leaf - Allocated Records, Freeblocks, Unallocated Space

- B-tree Table Interior - Unallocated Space

- B-tree Index Leaf - Unallocated Space

- B-tree Index Interior - Unallocated Space

- Pointer Map Pages - Skipped (No user data)

- Overflow Pages - Skipped (Records with overflow are rebuilt)

At this stage, Freelist trunk pages and Freelist leaf pages are identified (yes there are 2 types of freelist pages). The type of page determines what areas of the page are parsed.

- Freelist Trunk - Unallocated Space

- Freelist Leaf - Parsed based on original page type

As far as parsing of unallocated space and freeblocks, currently the tool essentially returns all printable characters in the areas. I plan to add advanced record recovery in a future release that will rebuild the records but the logic to do that programmatically is beyond my capabilities right now.

In the initial Beta Release, the code would successfully rebuild overflow records, but only if the initial part of the record was not at the end of cell content area. In the Beta 2 version I updated the code to handle overflow records where the initial record was at the end of the cell content area but found out those changes meant overflow records not at the end of the cell content area would throw an error. Whilst the Beta 2 code is fundamentally correct in determining the initial record payload size it appears I am sending too many bytes over to the overflow page function so the 4-byte overflow pointer at the end of the initial record is coming back as an invalid page number. I have yet to figure out why too many bytes are being sent over, but in the Beta 3 code I wrote some code to walk back the initial record payload to find the overflow pointer. This is just a temporary solution but in all my tests it does work.

Write-Ahead Log Parsing (Optional)

The overall process for this capability has not changed from the alpha code but now it parses freeblocks and unallocated space just like the main database file.

I did optimize this code significantly, so it is a lot more efficient. Previously I had a loop that would process the database schema in the main database file for every record if the table could not be established from walking back the WAL file. While not a big deal for small database for larger databases this could take time. Now the code only processes the database schema in main database file once at the beginning. In one of my test databases (photos.sqlite) that has over 1 million records the alpha code took 37 mins to chomp through the database, with the code optimizations here and other parts of the tool it chomps through it is 1.37 minutes and does the basic record recovery.

Here is the basic process:

1. Processes the database schema in the main database schema to determine which pages belongs to which table

2. Walk through the WAL files and parses all Frames

In this part the page type is identified and then depending on what type of page different areas of the page are parsed:

- B-tree Table Leaf - Allocated Records, Freeblocks, Unallocated Space

- B-tree Table Interior - Unallocated Space

- B-tree Index Leaf - Unallocated Space

- B-tree Index Interior - Unallocated Space

- Pointer Map Pages - Skipped (No user data)

- Overflow Pages - Skipped (Records with overflow are rebuilt)

For records that have overflow are currently not parsed completely. The initial payload that is stored on the b-tree leaf page is parsed along with column data defined in the payload header vs the payload body such as integer 1 and 0 and NULL.

Freelist Pages in the write-ahead log are currently not identified, but their contents are parsed and are included in the output, but will not not be flagged accordingly.

3. Determines the Table for each page.

Walks backwards through the WAL frames to identify a table interior page that references the leaf page in that frame. This process is repeated until a root page is identified (Basically traverses the b-tree from bottom to top).

If a table cannot be identified from the WAL, the information extracted from main database schema in step 1 is used to identify tables. This was the part that I optimized to make the process significantly quicker.

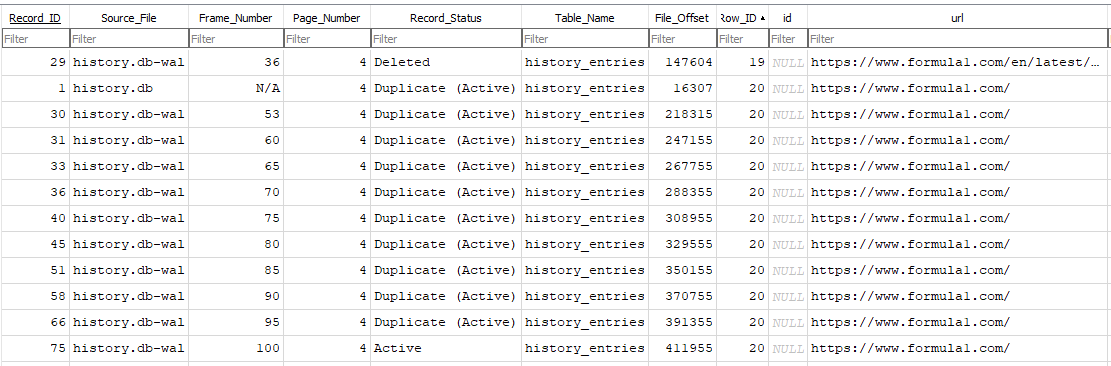

Output SQLite Database

In the alpha code the output was a single csv file for all records without column names. Now an SQLite Database is created in the output folder called SQBite_Extraction.db.

The output process starts by extracting the SQL language for each table in the input database (Done at the physical level). The SQL language is then used to recreate the original tables in the output database. In the initial beta release, I used the table name and their associated column names and made every column TEXT; however, this caused issues with BLOB fields. In Beta 3 the original column type is used so extracted records are inserted correctly.

Note: sqlite internal tables are skipped such as sqlite sequence table.

Along with the original table columns I added additional columns that provide information about the record so it can be validated.

The following extraction columns are added by SQBite

- Record_ID - Custom identifier for each record

- Source_File - Where the record was parsed from

- Frame_Number - For records from the WAL file this will be the Frame Number the record was parsed from otherwise N/A

- Table_Name - The table the record was identified as being part of

- Page_Number - The page number the record was parsed from

- Record_Status - The status of the record i.e allocated, freeblock, unallocated etc..

- File_Offset - The starting physical offset where the record was parsed from

Along with the original tables, additional tables maybe created.

The Recovered_Records table stores the unallocated space and free block content that is extracted from any type of page.

The Freelist table stored allocated records that were extracted from freelist pages. These records are in the same state they were in prior to being add to the freelist so that can be parsed normally. I have some ideas here where I can slot the records back into their original table using column analysis, but for now the table will be “unknown” as in the main database there is nothing physically to say which table it was part of. On that note, a reminder that freelist pages are not currently identified in the wal but they will be parsed normally and inserted into the correct table as the pointers are in journal (wal table identification code).

The unknown table will be created to store records where the table is not known or if there is a mismatched schema between the table the record was being identified as and the record content (I.e too many columns). In testing, records parsed from the wal file are inserted into this table when a b-tree expansion occurs or when a freelist page is reused. In the next release I am going to tweak my code for table identification to handle these scenarios, for example walk forward in the wal to the next commit frame to identify the table before walking backward to identify the table name.

Record Classification (Optional)

If you use the -c switch, the record status will be determined. This feature analyzes the output SQLite database by executing a series of query’s. Without running this feature the record_status is “Allocated”.

This process only runs on tables from the original database so the freelist, recovered_records and unknown tables are skipped.

Note: This process only works when you parse a write-ahead which causes multiple instances of each record. If you just have a main database file, the record_status is just updated from "Allocated" to "Active".

1. First it establishes the highest frame number for a page in a table and updates the record_status column for all allocated records on that frame to “Active”. The main database file doesn’t have frames so pages in the main database file are considered frame 0. This process is similar to querying the original database in an SQLite viewer.

2. Next the process looks at frames that do not contain the active records and compares those records with the active records based on the rowid and column data.

- If the rowid does not match any active records, the record_status is updated to “Deleted”

- If the rowid matches but the column data is different the record_status is updated to “Modified/Reused ID”

- If the rowid matches and there is no difference in the column data the record_status is updated to “Duplicate (Active)”

This feature is experimental and can take a significant amount of time to complete depending on the number of records in the database. In my speed tests, for a database that has less than 20k records in a single table when it is extracted like a chat database it will only take a few minutes to run through; however, with that 1 mil+ record database I mentioned earlier it took 5 hours to complete.

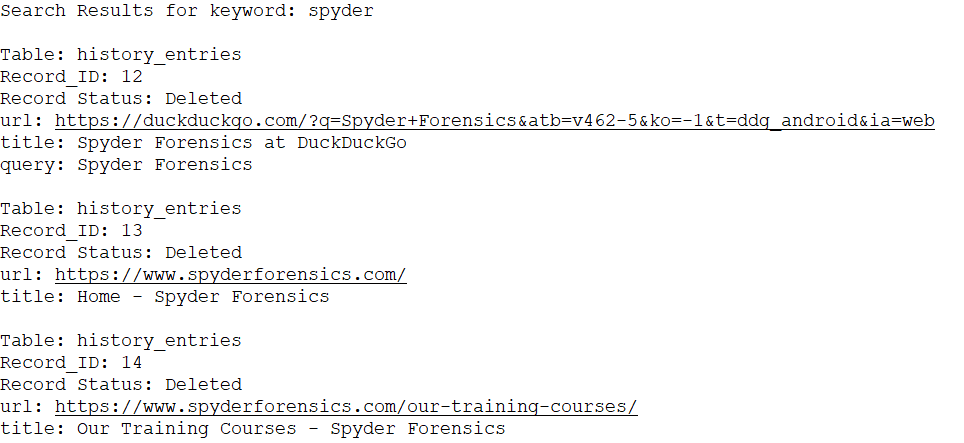

Instasearch (Optional)

If you use the -s switch, you can search for a keyword or phrase in the output database. The idea behind this feature is to give examiners the ability to do a quick triage of the database by searching every table in the database for a keyword and output a text file that provides the table, rowid and the column data that had the hit. Programmatically it is a very simple process and has been really valuable for me when I am researching databases so hopefully everyone else finds it useful.

The search runs through a loop and searches every table

1. Uses PRAGMA table_info on in the output database to get a list of table columns.

2. Searches for the search term in all columns in the table excluding the SQBite populated columns.

3. Writes the table name, Record_ID (SQBite ID), Record_Status, then the column name followed by the column data to a text file in the output folder.

This process will search on every field type including BLOB fields; however, be mindful that this is a text-based search based on user input, and with SQLite, data can be obfuscated such as Base64. Treat this search as a preview and do not solely rely on the results.

The output folder you specify will contain the output SQLite database. The name of the database is called SQBite_Extraction.sqlite.

If you used the InstaSearch feature, the results of the search will output in a txt file called keywordsearch_(keyword).txt

Next Steps

This latest code release is a major improvement from the Alpha code, but there is still a lot of work I want to do before I am comfortable releasing the official version 1 of the tool.

In its current state the tool does a lot, but it is still missing data for example WITHOUT ROWID tables.

Here are some of the capabilities I want to add to make this a more complete SQLite Forensic Solution. Some will go into the version 1 release, but others will be in future releases:

- Freelist Parsing in Write-Ahead Log (v1.0)

- Parsing of WITHOUT ROWID tables (v1.0)

- Reconstruction of Overflow Records in the Write-Ahead Log (v1.0)

- Tool Logging (v1.0)

- Summary Report (v1.0)

- Parsing of Pointer Map Pages (For rebuilding corrupt databases)

- Support for Rollback Journals

- Handling sqlite_internal tables (Currently skipped in the output)

- Parsing of Triggers and Views

- Support for encrypted databases

- Reconstruction of recovered records

- RowID analysis

- Transaction Analysis in the Journal Files

I welcome any feedback on the tool so far and if you have any suggestions for features or find bugs please let me know.

Comments

Post a Comment